In an industry where hacking is so celebrated, today I would like to offer the opposite: a survey of the activity of building software from a humanist and cognitive point of view.

What are Roslyn source generators?

If you haven’t read about source generators, then, in a nutshell, it’s a new extensibility point of the Roslyn compiler that allows you (and any NuGet package) to dynamically emit new C# code and add it to the compilation in progress. Source generators receive an object model representing the code being compiled and, based on that, can emit new code. They cannot, however, modify existing code.

Strictly speaking, Roslyn source generators don’t enable any scenario that was impossible before. The novelty with Roslyn source generators is that they are built into the Roslyn compiler, which gives two advantages. First, the build will be faster because the first stage of the compilation will need to be performed only once instead of twice. Second, since the C# compiler is hosted in the IDE, the code-behind artifacts will be visible at design time in the IDE using tools like IntelliSense.

Another decisive benefit of Roslyn source generators is that they are invented by Microsoft and will therefore benefit from immediate, broad, but also uncritical adoption. We can expect Roslyn source generators to be widely used and abused as soon as they become a stable part of .NET.

When to use source generators?

Microsoft gives several use cases for source generators. Most of them are excellent. Spoiler alert: not all are.

Among justified use cases of source generators, we can list:

- Generation of code-behind files for XAML, ASP.NET, settings, … which is now being done as standalone MSBuild tasks.

- Creation of an “application catalogue” for instance to index ASP.NET controllers, MEF components… which is now typically performed at run-time during application start-up using System.Reflection. It could also have been implemented using a post-compilation tool like PostSharp, but there was no significant attempt to do it.

- Generation of infrastructure code that requires the implementation of methods such as ToString or Equals, GetHashCode, comparison operators… which is now typically implemented using tools like PostSharp.

I believe that in these three classes of use cases, source generators are superior to the technology they replace.

When not to use source generators?

Source code generators are by design additive only, i.e. you can add new files to the compilation (possibly new partial classes), but you cannot modify existing ones. If you’re facing a problem that you would naturally address by editing existing code and that could not be isolated into a partial class, then a source generator is not the right tool for the job.

To illustrate this rule, I will take an example directly from Microsoft’s materials, and I will show why it could be considered as an abusive use of this technology.

Let’s look at the code, reproduced from Microsoft’s samples:

```csharp
public partial class UserClass
{
    [AutoNotify]
    private bool _boolProp;

    [AutoNotify(PropertyName = "Count")]
    private int _intProp;
}
```

The source generator would generate the following partial class:

```csharp
using System.ComponentModel;

public partial class UserClass : INotifyPropertyChanged
{
    public bool BoolProp
    {
        get => _boolProp;
        set
        {
            _boolProp = value;
            // The event argument must carry the name of the generated property.
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs("BoolProp"));
        }
    }

    public int Count
    {
        get => _intProp;
        set
        {
            _intProp = value;
            PropertyChanged?.Invoke(this, new PropertyChangedEventArgs("Count"));
        }
    }

    public event PropertyChangedEventHandler PropertyChanged;
}
```

What’s wrong with that?

At first sight, the tool seems to do a good job at avoiding writing the boilerplate. From this angle, source generators look good.

It’s true that this implementation does not support the advanced features proposed by PostSharp like computed properties or dependencies on child objects, but remember that this is only a simple example. Nothing in theory prevents you from performing a complex analysis of the code model and generating smarter code. If you put in months of work, you will eventually be able to implement that.

Another objection may be that you need to remember to assign the property instead of the underlying field, even if the field is what you see in your source code, otherwise the notification would not be raised. This remark could be addressed by emitting an error when you’re trying to directly assign the field.

Therefore, from the point of view of what you can do with it, there’s nothing wrong with source generators in this case of implementing INotifyPropertyChanged. You can do everything you need for this use case. But you must define fields instead of properties, and you must remember to set the property and not the field, even though the field and not the property is what is visible in your source code.

The problem with using a source generator to address the decade-old problem of INotifyPropertyChanged is that this solution is not idiomatic.

If you already have a class with properties and need to add INotifyPropertyChanged, you will have to refactor your properties into fields. Your code will look unnatural (breaking the “principle of least surprise”) and that refactoring itself is unproductive. You will have to add the right options to your [AutoNotify] so that it emits accessors with the visibility you need while this problem is already elegantly addressed by the C# language. These are all design smells that show that the solution is not idiomatic.
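For comparison, here is a minimal sketch of the idiomatic form: the same `UserClass` written with ordinary properties, with the notification plumbing written by hand. The `SetField` helper and the `Increment` method are illustrative names that do not come from Microsoft's sample, and `Count` gets a private setter only to show that accessor visibility is expressed with plain C# syntax rather than with an attribute option.

```csharp
using System.Collections.Generic;
using System.ComponentModel;
using System.Runtime.CompilerServices;

public class UserClass : INotifyPropertyChanged
{
    private bool _boolProp;
    private int _count;

    public event PropertyChangedEventHandler PropertyChanged;

    public bool BoolProp
    {
        get => _boolProp;
        set => SetField(ref _boolProp, value);
    }

    // Accessor visibility is stated directly in the language.
    public int Count
    {
        get => _count;
        private set => SetField(ref _count, value);
    }

    // Hypothetical method, present only so that Count changes from inside the class.
    public void Increment() => Count++;

    // Hypothetical helper: assigns the field and raises the event only when the value changes.
    // CallerMemberName supplies the name of the calling property.
    private void SetField<T>(ref T field, T value, [CallerMemberName] string propertyName = null)
    {
        if (EqualityComparer<T>.Default.Equals(field, value)) return;
        field = value;
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(propertyName));
    }
}
```

The class reads naturally, but the setter boilerplate is repeated for every property, and that repetition is exactly what an aspect would remove without asking you to turn properties into fields.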

Implementing INotifyPropertyChanged is a textbook problem for aspect-oriented programming (AOP). Actually, we can say that [AutoNotify] is an aspect – but inelegantly implemented with a source generator.

Unlike source generators, AOP frameworks are specifically designed to add infrastructure concerns into hand-written code, without requiring you to change it. Well-written aspects work with your code, not against it. That is, good aspect-oriented frameworks are idiomatic to their host language.

Source generators are a useful tool, but they don’t replace aspect-oriented programming.

Microsoft’s love-hate relationship with AOP

Microsoft has had a long love-hate relationship with aspect-oriented programming. Like all large corporations, Microsoft has the psychology of a dragon with many heads – each with its own eyes, mouth and brain. No wonder they are not always synchronized.

On one side, many teams have added AOP-ish features into their own product:

- Microsoft Transaction Server, later renamed COM+

- Custom behaviors in WCF

- Interceptors in Unity

- Action Filters in ASP.NET MVC and ASP.NET Core MVC

These teams didn’t just add these features because they were fun, but because they were useful. In all these technology stacks, aspects can be applied only at the boundaries of a component because the technology brokers the call between the client and the component. Neither the compiler nor the runtime engine was involved there, only the service host.
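Boundary interception of this kind can be sketched with `System.Reflection.DispatchProxy`, which ships with .NET Core and later. This is only an illustration of the brokering idea, not how any of the products above are implemented; `IOrderService`, `OrderService` and `LoggingProxy<T>` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical component contract and implementation.
public interface IOrderService
{
    int PlaceOrder(string product);
}

public class OrderService : IOrderService
{
    public int PlaceOrder(string product) => product.Length;
}

// Hypothetical broker: it sits between the client and the component
// and can run code before and after every call made through the interface.
public class LoggingProxy<T> : DispatchProxy where T : class
{
    private T _target;
    private List<string> _log;

    public static T Wrap(T target, List<string> log)
    {
        // DispatchProxy.Create builds an object that implements T and derives from LoggingProxy<T>.
        object proxy = DispatchProxy.Create<T, LoggingProxy<T>>();
        var typed = (LoggingProxy<T>)proxy;
        typed._target = target;
        typed._log = log;
        return (T)proxy;
    }

    protected override object Invoke(MethodInfo targetMethod, object[] args)
    {
        _log.Add("before " + targetMethod.Name);
        try
        {
            return targetMethod.Invoke(_target, args);
        }
        finally
        {
            _log.Add("after " + targetMethod.Name);
        }
    }
}
```

Only calls that go through the `IOrderService` reference returned by `Wrap` are intercepted. Calls that `OrderService` makes to its own members bypass the proxy, which is the limitation described above: the aspect lives at the boundary, not inside the component.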

Another example of technology that vitally needs aspect-oriented programming is WPF. The amount of code required to implement INotifyPropertyChanged, dependency properties or commands is colossal. This is why INotifyPropertyChanged is cited as a use case of source generators. Because it’s so useful.

Last, some Microsoft teams used PostSharp to improve their productivity.

It’s not surprising: aspect-oriented programming is incredibly useful. Our telemetry data suggest that AOP can easily reduce the source code size and complexity by 15%. That means that some users of our product see a benefit of only 1% and should probably stop using it, but other users decrease their code size by 50%. One of our customers confessed to saving millions of dollars thanks to PostSharp. This is how big AOP is. Many PostSharp users tell us they want it to be a standard part of .NET. There are several million full-time C# developers in the world. For every million developers, 150,000 are now writing code that could be generated with better quality by an algorithm. Just think about that huge waste of time.

The problem is that, on the other side, the C# team has really never embraced aspect-oriented programming. It is not even on their feature map.

Selfishly, it’s good for us that the language team does not care about AOP, because we can make some (hard-won) money by filling this gap. But for the community, this is a pity.

In the meantime, you can rely on PostSharp if you want to leverage the benefits of AOP. Frankly, many companies are not willing to take the risk of relying on a small company such as ours for a critical part of their build process – even if we’ve been there for almost 15 years. I’m grateful for the thousands of customers who decided to trust us, but I also understand that, for many of them, the vendor risk is a higher obstacle than the license price.

Therefore, I believe Microsoft’s love-hate relationship with AOP is detrimental to the productivity of the .NET ecosystem – especially to large projects.

Yes, aspect-oriented programming is an old concept and its hyped years are behind us. I’m not a fan of the AspectJ approach and have never tried to copy it into .NET. But the fact is that AOP addresses an abstraction gap of C# and that this gap itself cannot be ignored. AOP, AspectJ and even PostSharp are just solutions to a problem. The fact that you don’t like the solution does not magically make the problem disappear.

So, how can we approach that problem? What problems are programming languages solving anyway?

What is a programming language?

A programming language is a language. A language used by humans and by humans only. Compilers and interpreters are tools that convert the elements of human speech into instructions that can be executed by a computer. But the language itself is designed for human consumption. Humanity is the most fundamental characteristic of a programming language. It seems paradoxical because programming languages have traditionally been designed by people with a technological background and not, say, in philosophy or cognitive psychology. Maybe this is because languages used to be designed by the same group of people who implemented the compilers, which is definitely a technological activity.

The larger the abstraction gap between the language and the hardware, the more complex the compiler or interpreter needs to be. During the first decades of computing, the complexity of compilers and interpreters was severely constrained by the limitations of hardware, especially CPU and RAM. In the 1970s, specialized computers called LISP machines were developed. There were even Java coprocessors in the late 1990s. The C language, with its system of header files, was designed to optimize the memory usage of the compiler. In these pioneering times, programming languages had to remain close to the hardware – i.e. homomorphic to the machine.

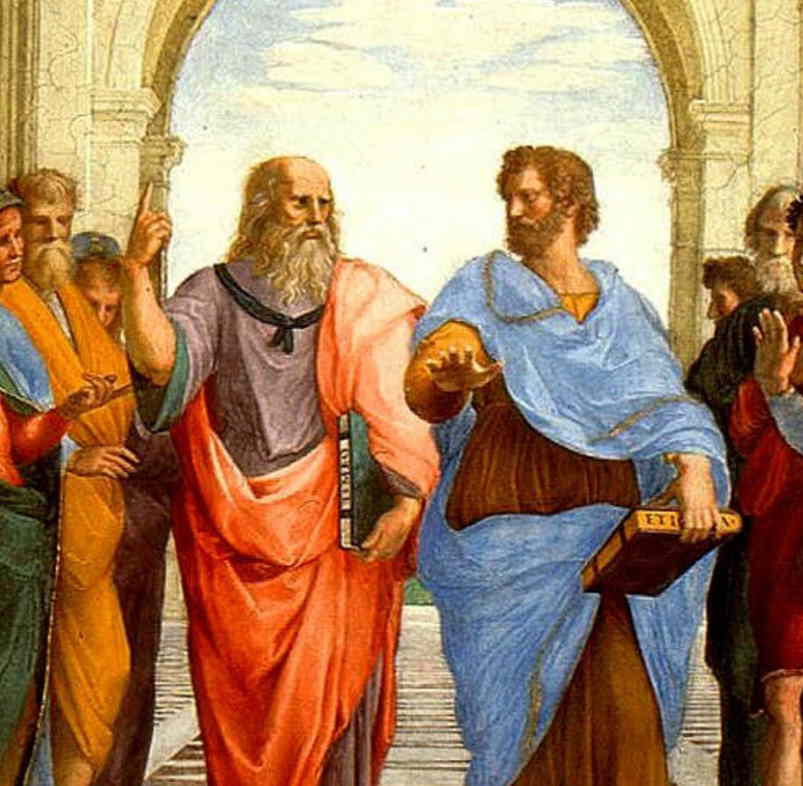

At each generation, the languages became closer to the way humans reason. Object-oriented programming was invented in the 1960s to create a language that would allow for expressing models of the physical world. It became SIMULA. Take the time to think about the concepts of class and inheritance. These concepts are purely irrelevant to hardware. They are however of the utmost importance for human reasoning and were first theorized, in Western culture, by Aristotle in the 4th century BC. (Please don’t make me say that Aristotle invented OOP!) We could say that programming languages became more and more logomorphic, if we accept logos in the meaning of reasoning (logic) and not speaking. Successful programming languages mimic the way we think.

However, in today’s world, RAM and compilation time are no longer constraints. The compiler only consumes a fraction of the total build time. What, then, really prevents programming languages from becoming even more logomorphic? Two things: the lack of investment in compilers (I believe every million dollars invested in the C# language can translate into a billion in productivity gains for the community), and a system of mental habits that internalized constraints that have now become obsolete.

What problem do programming languages and frameworks solve?

The problem developers solve is generally to implement a solution to a business problem – for instance implementing a web site. Implementation can be simple or complex, and can require more or less design. Let’s call the implementation problem the first problem. Developers typically focus their attention on the first problem. Developers are intellectual workers; their job is principally to produce, even if they may also take on many other roles in their job. The realm of developers is composed of source code, development tools, APIs, cloud services, and other software or hardware artifacts.

Development managers, however, have a different problem. Instead of focusing on the first problem, managers need to focus on the activity of developing, i.e. on the team of developers, on the way they cooperate, communicate or conflict, on hiring and firing, on the financing of the team and its relationships with external stakeholders, and so on. The realm of managers is composed of people, organizations and processes. Let’s call this focus on the social activity of software development the second problem.

In other words, the second problem is what gets revealed when you try to raise your awareness from the technical problem to the activity of solving the technical problem – an activity that is human and social. It’s like mindfulness but applied to software development. A higher level of awareness.

Now, if you look at software development from this higher point of view, ask yourself this question: What are the main constraints the industry is facing? What are its principal challenges? Is it the lack of processing power, of RAM, of storage, of network connectivity, of energy or battery capacity? For most of us, no. I concede all these problems are real, but they are not the real limiting factor. It is not the scarce economic factor that our industry has to cope with.

The real challenge of our industry is the scarcity of cognitive resources. What really limits development teams and companies is the amount of intelligence that they can attract to their projects with their budget. Everybody’s cognitive capacity is finite. Our individual cognitive capacity determines the maximal complexity of the problem we are capable of “loading” into our brain and therefore reasoning about. To cope with this finite capacity, we can attempt to decompose big problems into several smaller problems that can be reasoned about separately (we will see later how this affects the design of programming languages), but individual abilities put a limit on the size of these atomic problems. When the problem we are considering is well within our abilities, we are quick and swift at the task. When it gets closer to our limits, our productivity decreases sharply. When complexity exceeds our ability, we start simplifying the problem, and as our simplifications become incorrect, we start designing incorrect solutions. The most complex atomic problem a team is able to solve is limited by the capacity of its most intelligent member. The first dimension of the scarcity of cognitive resources is our individual finiteness.

The second dimension is obvious: as a company, the number of people you can hire is limited by your budget. The more brains, the more atomic subproblems you can solve in parallel – supposing you can split your problems into enough independent subproblems that are simple enough to be handled by average team members. This ability to split problems and make social cooperation efficient is in itself a serious challenge that our industry addresses with tools and practices in broad areas: versioned source control, project management, specialization of teams in the company and companies in the industry, analyzing and modeling, and so on. Software development is a human activity; besides its cognitive aspects (working with individual brains), it also has social/political dimensions (organizing the efficient cooperation of many individuals).

Let’s now go back to programming languages and development tools.

If the real challenge of our industry is to deal with the scarcity of cognitive resources in both its individual and collective dimensions, it becomes clear that programming languages should be primarily designed with this challenge in mind. Programming languages should reduce the cognitive load on developers, so developers can do more, and more people can be developers. Programming languages should allow for finer problem decomposition, so atomic problems become simpler and more individuals can simultaneously cooperate. Finally, programming languages should match the level and mechanisms of abstraction of human reasoning, i.e. should reduce the impedance between human thought and source code.

The best programming language is not necessarily the one that allows you to build a given functionality with the smallest number of characters. It is the one that requires the lowest cognitive resources, or the one that best allows the work to be distributed across a team with various qualifications.

Patterns and language extensions

Looking at programming as a work with language opens interesting insights, ones you don’t get when you’re stuck at the implementation level. When we change our point of view, when we focus our awareness on something different, we naturally come to different thoughts. On the contrary, when we always stick to implementation details, we’re always in micro-optimization mode and we cannot make conceptual leaps.

We all know the famous Gang of Four’s book Design Patterns: Elements of Reusable Object-Oriented Software, but only a few developers have gone back to the sources and studied the works of the spiritual father of the design pattern theory: Christopher Alexander, born in 1936, an influential architect and design theorist. He surveyed the theoretical foundations of the activity of designing artifacts such as barns or kettles (design as the activity of designing and not the result of this activity). The contribution of the Gang of Four was to apply these principles to software engineering – with the huge success we know. It is regrettable that most software developers now look at this work as a cookbook, now largely obsolete, and skip the theoretical parts. The theoretical foundations of design patterns are still of immense relevance for the design of programming languages and frameworks.

Alexander draws our attention to the fact that every profession has recurring elements of design that he calls patterns. A part of the education of every student of every trade is to study these patterns. For instance, Carpentry for Boys published in 1914 lists more than a hundred carpentry patterns that all apprentices should learn. Not only should they learn to execute them, but they should also understand in which context they should be used. Patterns are reusable elements of solution to recurrent but non-identical problems.

An essential characteristic of patterns is that they are named. Because of that, they form a professional dialect on top of the common language. Patterns are not only elements of design, but also elements of language. Because we’re able to talk about them, we’re also able to reason about them.

When carpenters talk of a rabbet joint, they don’t need to focus their intelligence on the specific cuts and angles. Their reasoning works at the level of abstraction of the pattern. When we are reasoning in terms of patterns instead of their implementation details, we are working with fewer but larger elements, and therefore our brain, with the same limited capacity, can handle more complex problems, and recursively, in a virtuous spiral that produces a richer and richer collection of reusable solutions.

The development of a trade is therefore always concomitant with the enrichment of its dialect.

How does that translate to programming languages? How can we extend our common language – say C# – so it reflects the expertise we have built in a domain?

First, we are using the tools object-oriented programming gives us. We have named classes to represent concepts, named methods to represent actions on these concepts, and named properties to represent observable characteristics of these concepts. That seems sensible and it is. But it is not sufficient to represent the whole realm of our reasoning.

How do you represent a pattern in C#? Well, you don’t. The C# programming language does not allow you to express, in an executable way, such a simple thing as the statement “the class Foo is thread-affine” or “the class Bar is observable”, even if thread affinity and observability are two well-identified patterns of UI development.

There is no way to extend the C# language with concepts of thread affinity, observability or other patterns that may be relevant to a specific niche, industry, or project. Either the pattern is implemented in the language (like event, lock, using, async, enumerators), or it is not. You cannot extend the language yourself.
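To make the gap concrete, here is a minimal sketch of what "the class Foo is thread-affine" turns into when written by hand in plain C#. The `VerifyAccess` helper and the `Value` property are hypothetical names chosen for the illustration, and the sketch assumes that affinity means "only the creating thread may touch the object".

```csharp
using System;

public class Foo
{
    // The thread that created the instance becomes its owner.
    private readonly int _ownerThreadId = Environment.CurrentManagedThreadId;
    private int _value;

    public int Value
    {
        // Every accessor has to repeat the check by hand.
        get { VerifyAccess(); return _value; }
        set { VerifyAccess(); _value = value; }
    }

    // Hypothetical helper: throws when called from any thread other than the owner.
    private void VerifyAccess()
    {
        if (Environment.CurrentManagedThreadId != _ownerThreadId)
            throw new InvalidOperationException(
                "Foo can only be accessed from the thread that created it.");
    }
}
```

The intent, a one-line statement about the class, is nowhere in the code. It has to be inferred from a check that is repeated in every member, and a new member that forgets the check silently breaks the pattern.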

I see this limitation as an important shortcoming of C#. It is essential that the programming language matches the level of abstraction at which people are reasoning.

There is now a gap between the way developers think and the way they write code. I think it’s crucial that the software development tools industry does more to bridge it.

This is what we have been doing with PostSharp since 2004 – although I acknowledge it took me 10 years to understand what my job was really about. In the same gesture, we seal a brick, make a wall, build a cathedral, and perfect the art of building cathedrals. It’s important to be experts in sealing bricks, but we must not lose the focus on the edifice we’re shaping and the perfection of our trade.

We must not forget that programming today is only incidentally about doing something that a machine can execute, and principally about coping with the limitations of your own mental capacities.

When designing programming languages and frameworks, we must not forget about the cognitive dimension of our work.

Orthogonal problem decomposition

Our brain can only handle a limited number of elements at a time. When we must reason about a problem of hundreds of moving parts, we cannot reason about all parts at the same time. One approach is called divide-and-conquer. We recursively divide the problem into subproblems and implement them as isolated artifacts – organized in a beautiful tree that resembles a botanic taxonomy: projects, namespaces, classes and finally methods. Besides this source code tree, we have a second hierarchy: inheritance, i.e. abstraction of common behaviors. These two hierarchies are how object-oriented programming implements divide-and-conquer.

There’s another technique humans use when designing complex systems: decomposition into several facets that are as independent (orthogonal) as possible. For instance, when an architect designs a house, she does not need to design the thermal and electrical solutions at the same time. These concerns can be addressed at different times, drawn on different layers in the CAD editor. Later on in the project, the interactions between these layers will be validated. In the meantime, thanks to this decomposition, her brain could focus on different subsets of the problem.

Software has also different facets that are partially independent from each other. For instance, security and robustness are two different facets that are almost independent from each other and from the business functionality. However, traditional object-oriented programming, with its separation of concerns into methods, classes, namespaces and projects, does not allow us to express these different facets in different “layers” of the code. All facets need to be merged together in the same classes and methods implementing the business features.
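A minimal sketch of this merging, with hypothetical names (`AccountService`, `Withdraw`, the `"teller"` role) and an assumed retry policy of three attempts:

```csharp
using System;

public class AccountService
{
    private decimal _balance = 100m;

    public decimal Withdraw(string callerRole, decimal amount)
    {
        // Facet 1: security.
        if (callerRole != "teller")
            throw new UnauthorizedAccessException("Only tellers may withdraw.");

        // Facet 2: robustness. Retry up to three times on a transient failure.
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                // Facet 3: the business rule, the only part specific to this method.
                // A real implementation would call a data store here, which could time out.
                if (amount > _balance)
                    throw new InvalidOperationException("Insufficient funds.");
                _balance -= amount;
                return _balance;
            }
            catch (TimeoutException) when (attempt < 3)
            {
                // Swallow the transient failure and let the loop try again.
            }
        }
    }
}
```

Three lines of business logic are wrapped in code belonging to two other facets, and the same wrapping would have to be repeated in every other method of the service.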

As you may have guessed, aspect-oriented programming was designed to address this shortcoming.

Aspect-oriented programming was not theorized to address the problem of design patterns, probably because both AOP and patterns were theorized at the same time and independently. It is much more powerful to theorize and implement AOP as a tool to build implementations of patterns.

You may not like AOP in the way it was designed almost 20 years ago, with its system of join points and pointcuts. You may not like AspectJ, its canonical implementation. Honestly, I also don’t like them. You may not like PostSharp, especially its reliance on MSIL rewriting. But again, the shortcomings of current implementations don’t magically eradicate the problem they are trying to address. The gap between human reasoning and programming languages remains.

Designing a language extension mechanism in 2020

There are two dogmas I would like to avoid.

The first is that we should necessarily follow all principles of aspect-oriented programming as you find them on Wikipedia. We should not. As much as we cannot dismiss the problem addressed by aspect-oriented programming, we have no obligation to follow its canonical implementation strategy. Actually, PostSharp has never been an orthodox aspect-oriented framework. It has never relied on the concepts of join points and pointcuts. Unlike AspectJ aspects, PostSharp aspects have always had access to the code model. This approach was also the one of Code Analysis (FxCop) and now of Roslyn analyzers and source generators. Therefore, we don’t have to accept or reject AOP as a whole. We can pick what we like and ditch what we don’t.

The second and opposite dogma we should avoid is that any mechanism that allows “aspects” to modify code is intrinsically bad. This dogma seems firmly rooted in some Microsoft teams. I’m quoting the introduction blog post of Roslyn source generators:

“Source Generators are a form of metaprogramming, so it’s natural to compare them to similar features in other languages like macros [or compiler plug-ins]. The key difference is that Source Generators don’t allow you _rewrite_ user code. We view this limitation as a significant benefit, since it keeps user code predictable with respect to what it actually does at runtime.”

This prejudice against code rewriting is not productive. It is not possible to bridge the abstraction gap without giving aspects (or the compiler plug-in) some way to rewrite code. I agree that it is too dangerous to give the aspects an unlimited and unpoliced ability to rewrite the code. But, as my experience with PostSharp proves, it is possible to give the aspect the ability to apply some well-defined transformations to some well-defined possible target elements of code. These well-defined transformations are:

- intercepting any member (method, property, event, field) – the most important of all;

- wrapping a method in a try/catch/finally;

- adding a new member to a class;

- manipulating custom attributes;

- manipulating managed resources;

- adding new interface implementations.

The key point is to make these transformations safely composable. If several interceptors are added to the same method, the resulting behavior should be predictable, deterministic and correct.
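One way to picture safe composition is a pipeline in which every interceptor declares an explicit order, so that the result never depends on the sequence in which aspects happened to be added. The sketch below is an assumption made for illustration and not PostSharp's actual mechanism; the `Interceptor` delegate, `InterceptorPipeline` and the `Order`/`Name` fields are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model: an interceptor receives the input and a delegate to the next step.
public delegate string Interceptor(string input, Func<string, string> next);

public static class InterceptorPipeline
{
    public static Func<string, string> Compose(
        Func<string, string> target,
        IEnumerable<(int Order, string Name, Interceptor Interceptor)> interceptors)
    {
        // Sort by declared order, then by name, so the outcome is deterministic
        // regardless of the sequence in which interceptors were registered.
        var sorted = interceptors
            .OrderBy(i => i.Order)
            .ThenBy(i => i.Name, StringComparer.Ordinal)
            .ToList();

        Func<string, string> pipeline = target;

        // Wrap from the innermost interceptor outwards so the lowest Order runs first.
        for (int i = sorted.Count - 1; i >= 0; i--)
        {
            var interceptor = sorted[i].Interceptor;
            var next = pipeline;
            pipeline = input => interceptor(input, next);
        }

        return pipeline;
    }
}
```

Registering a logging interceptor with order 1 and a caching interceptor with order 2 yields logging around caching around the target, whichever of the two was added first.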

Ironically, even the add-only nature of Roslyn source generators doesn’t make them safe. Two unrelated generators can generate two partial classes of the same name that would conflict with each other – for instance both would try to implement IDisposable and would necessarily conflict to introduce the Dispose method. This scenario has been supported in PostSharp since version 2.0 in 2009, when it was fully redesigned to support strong composability. Source generators are a quick and dirty compiler feature. They are useful, but they don’t sustain a long-term engineering vision.

If you want to implement something like AOP (or any mutable metaprogramming) and really solve the abstraction gap, you need two things: read-only access to the source model, and a mechanism to add predefined and safely composable (and therefore sortable) transformations to an existing project. These features can be implemented by the compiler without affecting the C# language. PostSharp is implemented as a post-compiler MSIL rewriter because it was designed at a time when the C# compiler was closed source, but there would be no insurmountable technical obstacle to implementing the same approach straight in the compiler.

Making the source code more predictable in the presence of interceptors is a solved problem. Hiding implementation details from the business source code is intentional, so displaying the compiler-generated instructions in the source code is actually not desirable. Developers definitely need to know that a method is cached, but they don’t need to know how it is being cached – until they really want to focus on this aspect. You can visualize the presence of an aspect on a method using code editor enhancements like CodeLens, icons, underlining, and tooltips.

Adapting the debugging experience to the presence of aspects is also essential but, again, that problem is already partially solved. In PostSharp, you have two modes when you step into a method call: step over aspects and go directly to the business code, or step into the aspect. This experience could be further improved by visualizing, at debugging time, the list of interceptors present on the method, and offering to step into one of those. There is no real conceptual problem there, yet the implementation of our debugger add-in was tremendously difficult.

There is no insurmountable obstacle to bridging the abstraction gap in C# while maintaining a strongly predictable execution model.

Summary

The preview release of Roslyn source generators reopened the debate around meta-programming and aspect-oriented programming. As often in our industry, we tended to be very technical at the risk of mentally confining ourselves to implementation details. By contrast, this essay was an attempt to contemplate the cognitive aspects of programming.

Hacking is fun and can get things done quickly, but hacking does not scale. Reducing the number of characters needed to implement a feature is a noble goal, but not if it makes it more cognitively demanding to maintain the source code.

What really limits the software development industry is the scarcity of cognitive resources – either the limited supply of intelligent people, or the finiteness of human intelligence itself. Since we cannot increase our intelligence, we must reduce complexity.

In this struggle for simplicity, programming languages have a crucial role to play. As languages, they define but also limit what we can express with them and even how we reason. There are unfortunately still things that we are able to think but unable to express in C#: for instance the implementation of patterns or decomposition of orthogonal concerns. I call that the language abstraction gap.

Aspect-oriented programming was an attempt, 20 years ago, to address that abstraction gap. You may not like its canonical implementation in AspectJ or the MSIL rewriting that PostSharp is doing, but just dismissing solutions doesn’t magically address the need.

The fears of Microsoft’s language teams regarding code rewriting are largely unfounded. Yes, designing a well-engineered framework for code rewriting is a complex and expensive project, but a feasible one.

A lot of experience has been accumulated in the last decade around the design of aspect-oriented frameworks, and about the visualization and debugging of code enhanced with aspects. It has become a mature art. I’m proud that PostSharp contributed to it.